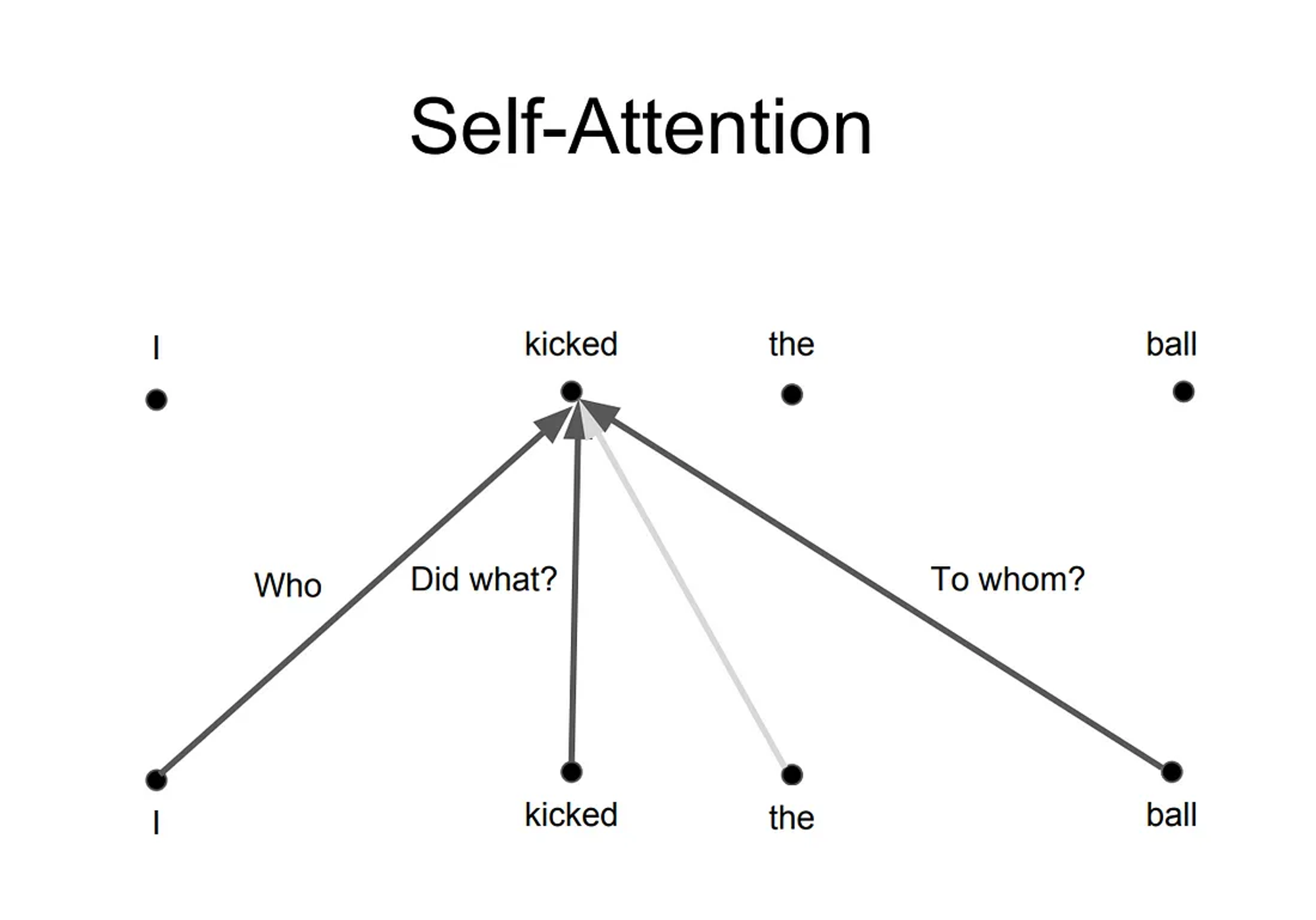

Transformers in Large Language Models (LLMs) process text through a stack of layers built around self-attention. This mechanism lets every token draw information directly from every other token, so the model can capture long-range dependencies. Positional information is added so the model still accounts for the sequential order of words.

## The Big Picture

Imagine you are trying to understand a complex story with multiple characters and subplots. If you read it strictly linearly, you might miss important connections between events. Instead, y..
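The self-attention idea can be illustrated with a minimal, single-head sketch of scaled dot-product attention, the formulation introduced with the original Transformer. It uses plain Python for readability rather than speed. The projection matrices `w_q`, `w_k`, `w_v` and the toy token vectors are illustrative assumptions, not weights from any real LLM. Production models also add multiple heads, positional encodings, causal masking, and learned parameters, all of which this sketch omits.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def transpose(m: Matrix) -> Matrix:
    return [list(row) for row in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    # Subtract the row maximum before exponentiating for numerical stability.
    top = max(row)
    exps = [math.exp(x - top) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix) -> Tuple[Matrix, Matrix]:
    """Single-head scaled dot-product self-attention over token vectors x (n x d_model).

    Assumption: no positional encoding or causal mask, so this sketch alone
    ignores word order; real LLMs add both.
    """
    q = matmul(x, w_q)  # queries, n x d_k
    k = matmul(x, w_k)  # keys,    n x d_k
    v = matmul(x, w_v)  # values,  n x d_v
    d_k = len(q[0])
    scores = matmul(q, transpose(k))  # n x n: affinity of token i for token j
    scaled = [[s / math.sqrt(d_k) for s in row] for row in scores]
    weights = [softmax(row) for row in scaled]  # each row sums to 1
    # Each output row is a weighted mix of all value vectors.
    return matmul(weights, v), weights


# Usage: three hypothetical 2-dimensional token vectors, identity projections.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
output, weights = self_attention(tokens, identity, identity, identity)

for i, row in enumerate(weights):
    print(f"token {i} attends with weights {[round(w, 3) for w in row]}")
```

Because every token computes a weight for every other token in one step, the third token can draw on the first and second equally, regardless of how far apart they sit in the sequence. This is the property that helps with the "complex story" problem: connections between distant events do not have to be carried forward word by word.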